Beyond Speed: Why Governance Is Now AI’s Competitive Edge

As AI accelerates, the winners will be those who innovate boldly and govern wisely.

Two stories from the same week tell us everything about where enterprise AI really stands.

In San Francisco, OpenAI’s Dev Day unveiled the next wave of capability - agents, reasoning models, multimodal inputs, and an agent platform built to make AI easier to deploy.

In Canberra, Deloitte agreed to refund the Australian government after an AI-assisted report contained fabricated citations and references - the result of a process gap, not bad intent.

Two headlines. Two sides of the same truth:

AI isn’t just moving fast.

It’s moving faster than most organisations are ready to govern.

The New Reality: Innovation Outpacing Readiness

OpenAI’s Dev Day showed us what’s coming: with the introduction of agents, AI isn’t just answering, it’s acting.

Yet this same capability raises new questions about trust, validation, and oversight.

The Deloitte example doesn’t make them an outlier - it makes them a case study for the entire industry.

It’s what happens when traditional quality assurance processes, built for human authors, meet new AI workflows that can fabricate information with confidence.

No bad faith. Just a gap between AI innovation and organisational readiness.

To their credit, Deloitte has also doubled down on responsible AI - expanding its partnership with Anthropic to train 15,000 professionals and embed governance at scale.

Why This Matters for Leaders

AI transformation is no longer about whether to adopt AI, but how to absorb it safely.

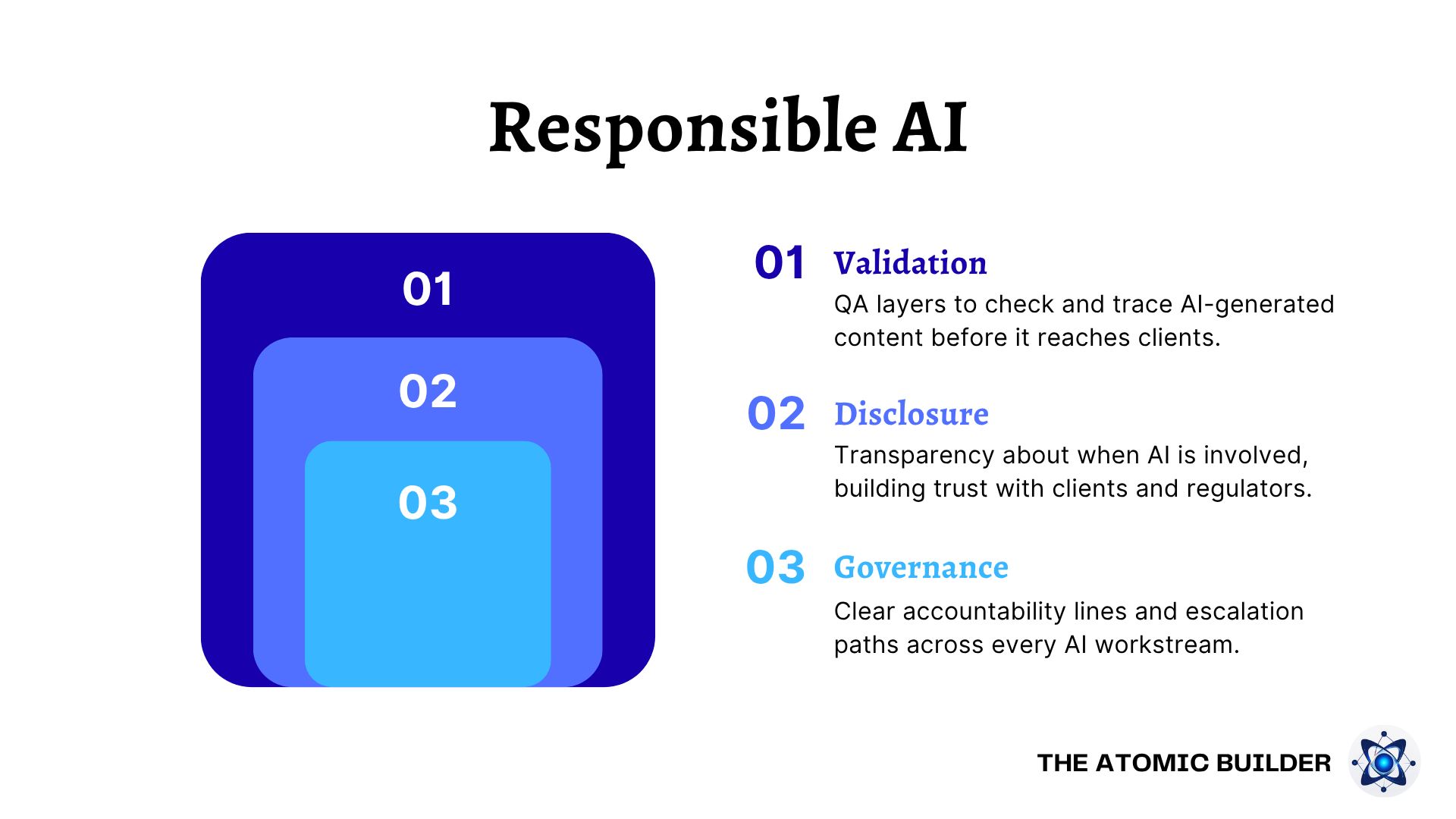

In my work with enterprises, I see three capability gaps repeating everywhere:

Validation — Enterprises need QA layers to check and trace AI-generated content before it reaches clients.

Disclosure — Clients, regulators, and the public expect transparency about when AI was involved.

Governance — Most AI workstreams operate without clear accountability lines or escalation paths.

Three Layers of Responsible AI

Validation, disclosure, and governance aren’t blockers; they’re accelerators. Enterprises that operationalise these three layers turn AI risk management into a strategic advantage.

When embedded early, they allow teams to scale AI faster without losing trust.

The Balance to Strike

But governance isn’t about slowing things down - it’s about scaling safely. The organisations that get this right make responsibility operational, not theoretical.

Nearly every major enterprise now has (or should have…) an AI governance framework, risk policy, or ethics charter.

The challenge isn’t writing those principles - it’s making them real: ensuring they shape how teams design prompts, validate outputs, and sign off deliverables.

We can already see what this looks like when done well:

Microsoft embeds its Responsible AI Standard across every product division. Their Office of Responsible AI and Aether Committee sit within engineering workflows, not above them — ensuring governance happens in the build, not after release.

PwC established internal AI Model Review Boards to evaluate explainability, bias, and documentation before models reach clients. It’s a mechanism that turns principles into process.

Air Canada’s chatbot case shows the other side of that coin — when an AI-generated response went unchecked, the company faced legal and reputational fallout. It’s not malice or neglect; it’s what happens when governance hasn’t yet filtered into frontline use.

These examples share a common lesson:

AI maturity isn’t measured by the number of models you deploy — it’s measured by how far accountability travels inside your organisation.

The goal for leaders is to make responsibility self-reinforcing:

Governance that’s visible, not buried in policy.

Validation that’s built into every workflow.

Oversight that empowers, not slows.

When governance becomes part of how people work, trust in what you do grows naturally alongside innovation.

So, where do you start?

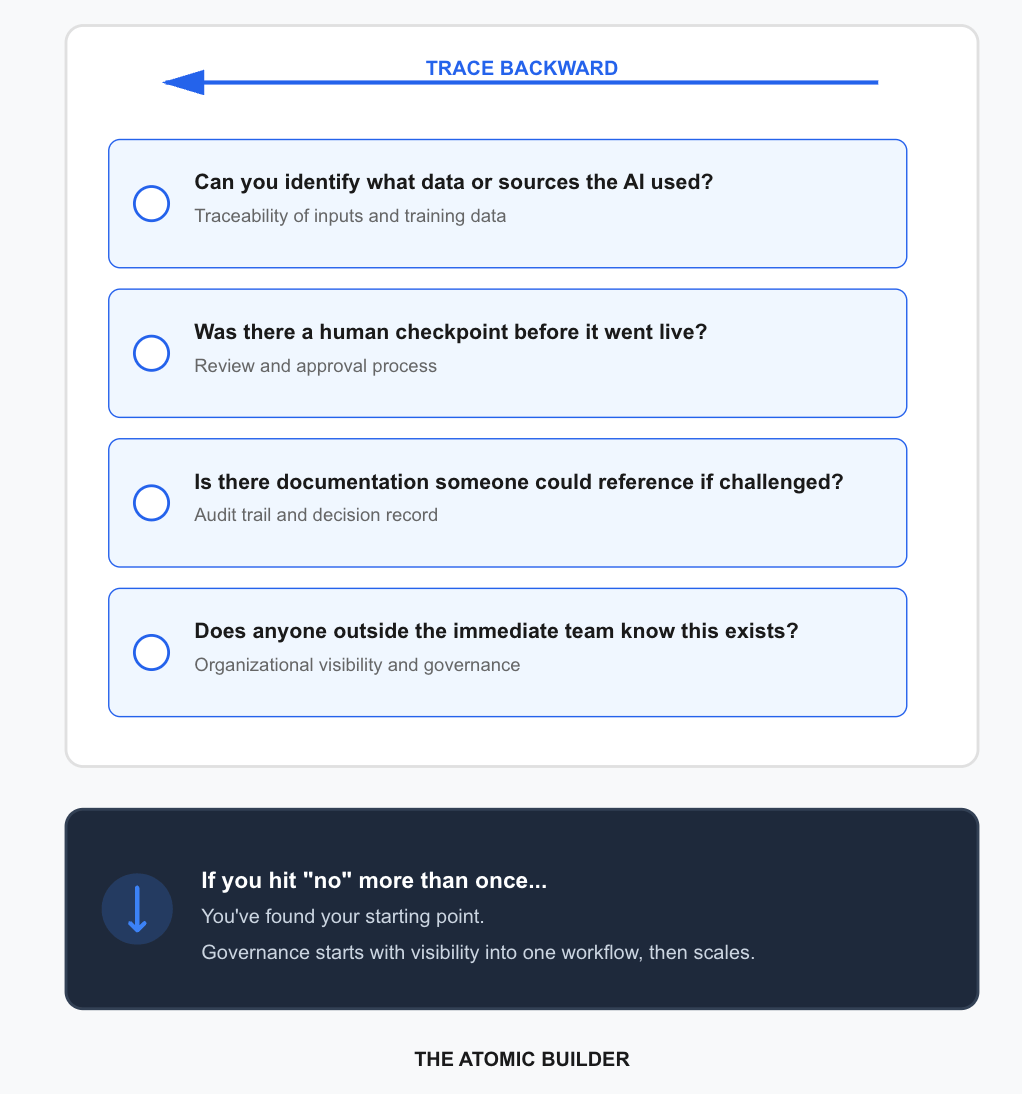

One Audit To Run This Week

Pick your highest-stakes AI workflow - the one touching clients, revenue, or compliance. Then trace a single output backward:

Can you identify what data or sources the AI used?

Was there a human checkpoint before it went live?

Is there documentation someone could reference if this output is challenged?

Does anyone outside the immediate team know this AI workflow exists?

If you hit "no" more than once, you've found your starting point.
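For teams that want to record this audit rather than run it from memory, here is a minimal Python sketch of the four questions above. Everything in it is an assumption for illustration: the `OutputAudit` class, its field names, and the example workflow name are hypothetical and not part of any framework or tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OutputAudit:
    """One traced AI output, scored against the four audit questions (hypothetical names)."""
    workflow: str
    sources_identified: bool    # Can you identify what data or sources the AI used?
    human_checkpoint: bool      # Was there a human checkpoint before it went live?
    documentation_exists: bool  # Is there documentation to reference if challenged?
    known_outside_team: bool    # Does anyone outside the immediate team know it exists?

    def gaps(self) -> List[str]:
        """Return the names of the questions answered "no"."""
        checks = {
            "sources_identified": self.sources_identified,
            "human_checkpoint": self.human_checkpoint,
            "documentation_exists": self.documentation_exists,
            "known_outside_team": self.known_outside_team,
        }
        return [name for name, passed in checks.items() if not passed]

    def is_starting_point(self) -> bool:
        """More than one "no" marks this workflow as the place to start."""
        return len(self.gaps()) > 1


# Illustrative example: a made-up workflow with two gaps.
audit = OutputAudit(
    workflow="client-report-drafting",
    sources_identified=False,
    human_checkpoint=True,
    documentation_exists=False,
    known_outside_team=True,
)
print(audit.gaps())
print(audit.is_starting_point())
```

Keeping each answer as a named field means the result can be logged per workflow and compared over time, which is one simple way to get the visibility described next.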

Governance doesn't require a committee - it starts with visibility into one workflow, then scales from there.

The Takeaway

The future of enterprise AI isn’t about choosing between speed and safety. It’s about building both into your operating model.

Organisations that master both will define the next phase of AI transformation.

I hope this week gave you a clearer way to think about AI’s balance, and how innovation and accountability must evolve together. See you next week!

Faisal

P.S. Know someone else who’d benefit from this? Share this issue with them.


The Atomic Builder is written by Faisal Shariff and powered by Atomic Theory Consulting Ltd — helping organisations put AI transformation into practice.