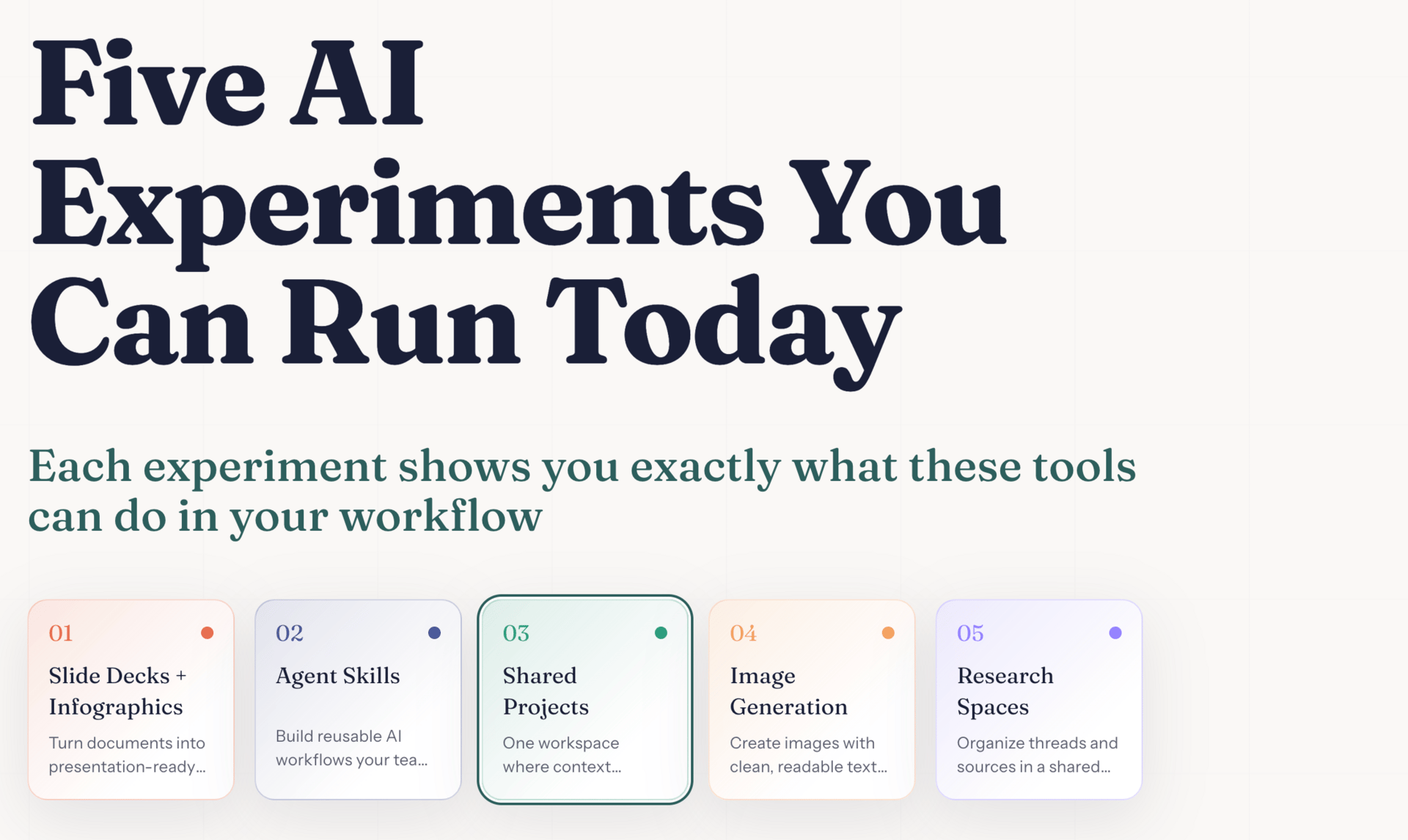

Five AI Experiments You Can Run Today

Each experiment shows you exactly what these tools can do in your workflow

The December issue of this newsletter, on the AI capabilities that changed in 2025, was the most-read of the year.

But here's the thing: reading about AI capabilities and actually using them are completely different.

So I spent the last week building something to take that further: a lab where you can try each capability yourself. Five experiments. Thirty minutes each. Copy-paste prompts included.

No course to buy. No theory to absorb. No vague promises about "AI transformation."

The gap between reading about AI capabilities and actually testing them is where most people get stuck. Companies spend months debating which tools to adopt when they could just run a 30-minute experiment and know for sure.

This experimentation "lab" closes that gap. Within half an hour, you'll know whether a capability works for your workflow.

Access it here: lab.atomictheory.ai

The Five Experiments

Each one covers a capability that changed in 2025: not theory, but tools you can use right now. NotebookLM went from "interesting research tool" to "instant deck generator." Claude Skills turned prompts into reusable workflows. ChatGPT Projects made team context persistent. Nano Banana finally (!!) produced legible text in AI images. Perplexity Spaces became living research hubs.

Here's what you can try:

Slide Decks + Infographics

Turn any document into presentation-ready slides in 30 seconds using NotebookLM. I tested this with a 20-page strategy doc. I got back a 5-slide executive version and a 12-slide team version. No manual screenshot cutting. No reformatting. Just upload, generate, done.

The experiment walks you through exactly what works best, what to expect in the output, and how to know if it's ready to use.

Reusable Workflows

Encode your recurring tasks as Claude Skills so they run consistently across your team. This solves the "good prompts in Notion that nobody uses" problem. Instead of documenting processes, you build workflows people can actually repeat without thinking about it.

The experiment shows you how to turn one manual task into an automated skill: competitive research, meeting prep, or whatever takes you two hours every week.

Team Workspaces

Set up persistent context in ChatGPT Projects where your team can work asynchronously without losing the thread. Replace those email chains where the fifth person asks a question that was already answered in the second message.

The experiment builds a project workspace for a specific use case, then shows you the difference between this and regular ChatGPT conversations.

Production Visuals

Generate diagrams, mockups, and campaign assets with Nano Banana Pro, the first AI image model that creates clean, legible text. These are design-ready outputs you can actually use.

The experiment gives you an example diagram to create (modify it if you like) so you can see what the model handles well and where it still needs human refinement.

Living Research

Build research hubs in Perplexity Spaces that accumulate insights over time instead of starting from scratch with every question. Your competitive intel stays current automatically instead of going stale in a deck.

The experiment sets up a Space for a specific research area, then shows you how it compounds value over weeks instead of being a one-time search.

What Makes This Different

Each experiment has the same structure:

- What the capability is and how you can use it
- An example prompt (copy-paste ready, no modifications needed)
- What you'll get (the specific output)
- Success criteria (so you'll know whether it worked or needs iteration)

There's also a "common mistake" callout for each one: the thing that trips people up when they first try it. I learned these by watching teams test these capabilities. It saves you 20 minutes of troubleshooting.

How to Use the Lab

Pick the capability that solves your biggest current friction point: the one that would free up the most time or remove the most manual work.

Try that experiment this week.

If it works, you've freed up capacity. If it doesn't, you've learned what "production-ready AI" looks like in your context. Either way, you're ahead of where you were Monday.

If it does work, figure out how to make it operational. Can your team use this consistently? Where does it fit in your existing workflows?

The lab shows you what's possible. Implementation across your company is the step after that.

Try the experiments: lab.atomictheory.ai

Need help making this operational?

These experiments show what's possible. The harder part is implementing them systematically across your company: figuring out which capabilities map to your processes, running successful pilots, and building the foundation to scale what works. That's where I can help.

You can work with me directly through Atomic Theory for AI guidance and implementation. Or, before deciding to speak with me, talk through your specific challenges with Atom, my AI assistant, which knows my frameworks and can help you get started.

Learn more: atomictheory.ai

Or just reply to this email. We can talk through what makes sense for your situation.

If you try one of the experiments, I'd love to hear how it went. Reply and let me know: what worked, what didn't, what surprised you. What you tell me helps me build better tools and write better issues for you.

See you next week!

Faisal

P.S. Know someone else who’d benefit from this? Share this issue with them.

Received this from a friend? Subscribe below.

The Atomic Builder is written by Faisal Shariff and powered by Atomic Theory Consulting Ltd — helping organisations put AI transformation into practice.