The AI Capabilities You're Not Testing

This is how you avoid rework in 2025

You planned your AI year in January: doc analysis, summarisation, categorisation, RAG. You probably rolled out Microsoft Copilot too...

Then last week you saw real-time video understanding, sub-second voice agents, and thinking models that obsolete your rules engines. None of it made the roadmap - not because it isn't valuable, but because it didn't exist (or wasn't obvious) when you planned.

That's the discovery gap: AI moves faster than annual cycles. If no one's scanning and prototyping what's newly possible in your org, you ship a good solution while a better one sits one tab away in Google AI Studio. Experimentation is key.

This issue is about fixing that: run two-hour discovery sprints in AI Studio to validate today's capabilities before you brief UX or commit engineering.

This is how you avoid rework in 2025.

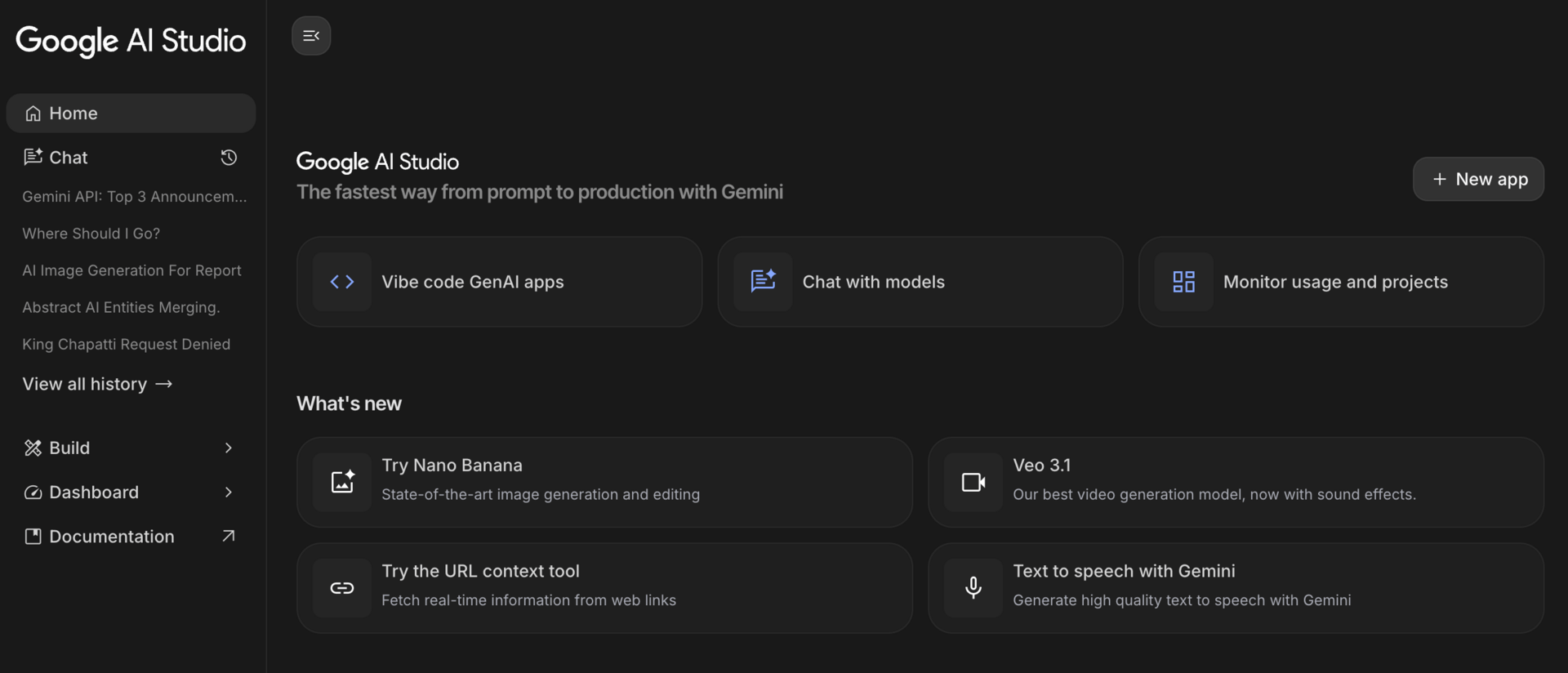

The Google AI Studio playground

What you see when you open AI Studio (that's not on your roadmap)

Open aistudio.google.com and look at the "Build" gallery. You'll see capabilities that probably didn't make your annual planning:

- Conversational voice apps with Gemini Live
- Image animation with Veo 3
- Real-time search grounding for current information
- Location-aware agents using Maps data
- Video understanding that analyses footage frame-by-frame
- Text-to-speech with contextual understanding
- Image generation at precise aspect ratios
- Flash-Lite models with sub-second response times

‘Build’ helps you understand what capabilities exist today

Most product leaders see this and think "interesting demos." The smart ones think "which of these solves a problem I'm currently planning to build a different way?"

Five things you can prototype in AI Studio today (that most teams don't know about)

Stop thinking of AI Studio as a demo environment. It's a rapid prototyping tool that lets you validate capabilities before you write briefs or commit resources. Here's what's available right now:

1. Video understanding (analyse footage, don't just transcribe it)

In AI Studio: Upload video, ask Gemini to identify patterns, extract key moments, generate summaries, spot anomalies.

Two-hour test: Upload five sales call recordings. Ask: "What objections come up most frequently across these calls?" See if it catches patterns your team currently analyses manually.

What you're validating: Does video understanding work for your specific use case, or is it just impressive in demos?

2. Real-time audio transcription with contextual understanding

In AI Studio: Not just speech-to-text, but transcription with summarisation and context as audio plays.

Two-hour test: Record a team meeting. Test transcription accuracy with your industry terminology. Check if it captures technical terms, acronyms, context.

What you're validating: Is transcription quality good enough to build features around, or does it break with your vocabulary?
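One way to make the vocabulary check less subjective is to score how many of your own terms survive transcription. This is a minimal sketch, assuming you copy the transcript text out of AI Studio by hand. The `term_recall` helper, the sample transcript, and the term list are all hypothetical and not part of any Google tooling.

```python
import re


def term_recall(transcript: str, expected_terms: list[str]) -> tuple[float, list[str]]:
    """Return the share of expected terms found in the transcript, plus the misses.

    Matching is case-insensitive and whole-word, so "SLA" does not match "slate".
    """
    missed = []
    for term in expected_terms:
        pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
        if not re.search(pattern, transcript, flags=re.IGNORECASE):
            missed.append(term)
    found = len(expected_terms) - len(missed)
    recall = found / len(expected_terms) if expected_terms else 1.0
    return recall, missed


# Hypothetical example: text pasted from a transcription run, and the terms you care about.
transcript = "We reviewed the SLA breach and agreed the churn forecast needs a new baseline."
terms = ["SLA", "churn", "ARR", "baseline"]

recall, missed = term_recall(transcript, terms)
print(f"Recall: {recall:.0%}, missed: {missed}")  # Recall: 75%, missed: ['ARR']
```

A list of missed terms is often more useful in a brief than the percentage, because it tells Engineering exactly which vocabulary needs handling.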

3. Thinking mode for complex reasoning

In AI Studio: Toggle "Think more when needed" for queries requiring deeper analysis. Takes longer, produces better reasoning.

Two-hour test: Give it a complex business scenario requiring strategic analysis. Compare standard mode vs. thinking mode output quality.

What you're validating: Does the quality improvement justify the latency for your use case?

4. Precise image aspect ratio control

In AI Studio: Generate images at exact dimensions - vertical phone wallpapers, horizontal banners, product shots at your exact specs.

Two-hour test: Generate ten product lifestyle images at your banner dimensions. Test brand consistency, quality, usability.

What you're validating: Is generated imagery good enough for your brand standards, or just "interesting"?

5. Flash-Lite for sub-second conversational responses

In AI Studio: Gemini 2.5 Flash-Lite delivers near-instant responses for conversational AI that feels truly real-time.

Two-hour test: Build a simple conversational interface using Flash-Lite. Measure actual latency with your typical queries.

What you're validating: Is latency fast enough to change your UX expectations, or just marginally better?
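If you want numbers rather than a feeling, a small timing harness helps. The sketch below assumes you already have some function that sends a query to the model and returns the reply. Here that function is a hypothetical stand-in called `fake_model` that just sleeps, so swap in your own client code. Nothing in it is an AI Studio or Gemini API.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency(
    ask_model: Callable[[str], str], queries: list[str], runs: int = 3
) -> dict[str, float]:
    """Time every query `runs` times and summarise latency in milliseconds."""
    if not queries or runs < 1:
        raise ValueError("need at least one query and one run")
    samples_ms = []
    for query in queries:
        for _ in range(runs):
            start = time.perf_counter()
            ask_model(query)
            samples_ms.append((time.perf_counter() - start) * 1000)
    samples_ms.sort()
    # Nearest-rank 95th percentile: the slow responses users actually notice.
    p95_index = math.ceil(0.95 * len(samples_ms)) - 1
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }


def fake_model(query: str) -> str:
    """Hypothetical stand-in for a real model call; replace with your own."""
    time.sleep(0.01)
    return "ok"


stats = measure_latency(fake_model, ["Where is my order?", "Cancel my plan"], runs=2)
print({name: round(value, 1) for name, value in stats.items()})
```

Report the 95th percentile alongside the median. A conversational interface feels only as fast as its slow responses.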

The pattern: Spend two hours in AI Studio with real examples from your domain. Answer one question: Does this capability fundamentally work for my use case? That answer changes what you brief teams to build.

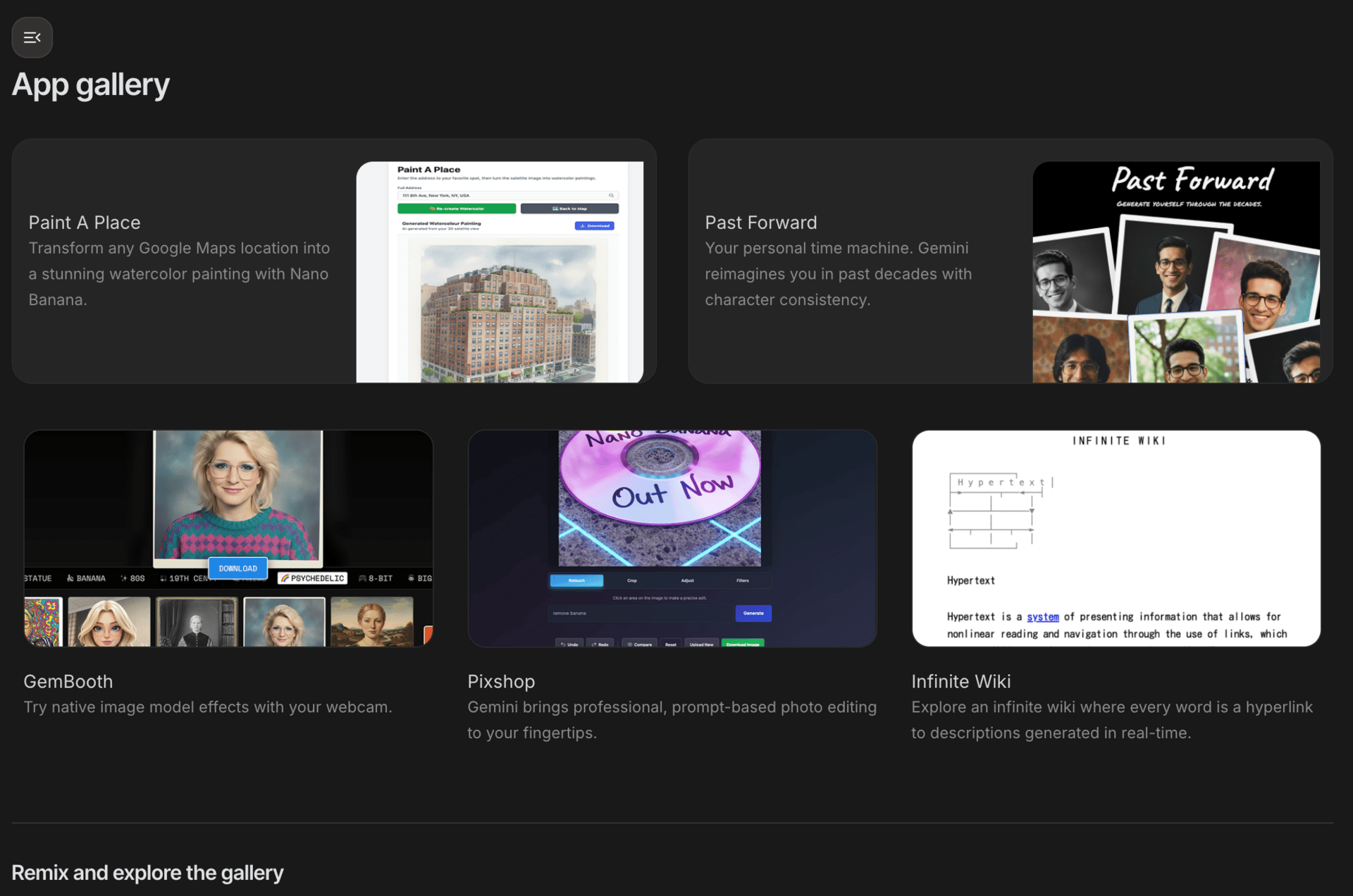

The app gallery is a great place to get a feel for what’s possible. You can also remix these apps - it’s a good way to start and learn.

Remix one of the pre-made apps to rapidly create something new

What this looks like: Two hours in AI Studio

Let me walk through two examples. One is a hypothetical enterprise use case showing the discovery process. The other is Aura - the meditation app I actually built in AI Studio to explore voice and multilingual capabilities. Both follow the same pattern: open AI Studio, test with real examples, discover what works.

Example 1: Field service video analysis

A manufacturing company's field service team captures hundreds of equipment repair videos but rarely reviews them for training.

What they could do in AI Studio:

Hour 1 - Discovery:

- Clicked "Video understanding" in the gallery
- Uploaded ten repair videos from different technicians
- Prompted: "Identify common troubleshooting steps across these videos"
- Tested: "Flag any instances where safety protocols are skipped"

Hour 2 - Validation:

- Tested with poor lighting, different angles, various video quality
- Found minimum requirements: 720p resolution, adequate lighting
- Documented what worked: pattern identification, safety checks
- Documented what broke: extreme angles, very poor lighting

The brief they handed to UX and Engineering:

"Video analysis fundamentally works - tested in AI Studio with ten real examples. Can auto-generate training clips and flag safety issues. Minimum specs: 720p, adequate lighting. Here are the exact prompts that worked, here are edge cases that need handling."

Result: Engineering built production system with validated requirements. UX designed around constraints discovered in AI Studio, not guessed. Shipped in eight weeks.

Example 2: Aura. What I built in AI Studio exploring voice + multilingual

I wanted to see what's actually possible with voice and personalisation. So I spent a Saturday afternoon in AI Studio building Aura - a meditation app.

What I did in AI Studio:

I started with the "Create conversational voice apps" template and built an interface where you describe your mood, pick a duration (short/medium/long), and get a personalised guided meditation generated in real time.

A simple UI to test the capabilities

What I learned building in AI Studio:

Voice quality is production-ready (and comparable to services like ElevenLabs). Natural pacing, calming tone, proper meditation cadence. I found this by actually building and testing, not reading documentation!

Multilingual switching is instant. Same quality across languages. No degradation. Discovered by clicking through language options and listening.

Support multiple languages with apps easily. English…

…Hindi, or whatever you need for your demographic

Why this matters beyond meditation apps:

Building Aura in AI Studio revealed capabilities I immediately connected to work problems:

- Customer onboarding in users' native languages
- Voice-based accessibility for products
- Personalised training content at scale
- Hands-free interfaces for field workers

You can try Aura yourself. I built it to explore, but more importantly - building it in AI Studio taught me what's possible in ways reading docs never would.

The point: AI Studio isn't just for validating work ideas. Build something personal. The discoveries translate to enterprise applications you'd never imagine in requirements meetings.

How AI Studio changes what you hand to teams

Two hours in AI Studio answers the questions that usually take weeks to figure out during build:

Be a friend to UX: "Here's what actually works" - real examples tested, constraints discovered, edge cases documented

Be a friend to Engineering: "This is technically feasible" - validated with real data, performance characteristics known, requirements clear

The discovery sprint in AI Studio:

1. Pick a capability you didn't know existed
2. Build a basic prototype with real examples
3. Test what works, what breaks, what constraints matter
4. Document findings

That becomes your brief. UX and Engineering start with validated capabilities, not assumptions to figure out while building.
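If you run these sprints regularly, it helps to record findings in the same shape every time. Here is a minimal sketch of one way to do that, filled in with the field service example from earlier. The `SprintFindings` class and its field names are my own illustration, not an AI Studio feature.

```python
from dataclasses import dataclass, field


@dataclass
class SprintFindings:
    """One discovery sprint, captured in a form you can paste into a brief."""

    capability: str
    examples_tested: int
    prompts: list[str] = field(default_factory=list)
    worked: list[str] = field(default_factory=list)
    broke: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)

    def to_brief(self) -> str:
        """Render the findings as plain text, skipping any empty section."""
        lines = [
            f"Capability: {self.capability}",
            f"Examples tested: {self.examples_tested}",
        ]
        sections = [
            ("Prompts that worked", self.prompts),
            ("What worked", self.worked),
            ("What broke", self.broke),
            ("Constraints", self.constraints),
        ]
        for heading, items in sections:
            if items:
                lines.append(f"{heading}:")
                lines.extend(f"  - {item}" for item in items)
        return "\n".join(lines)


# Values taken from the field service example in this issue.
findings = SprintFindings(
    capability="Video understanding",
    examples_tested=10,
    prompts=[
        "Identify common troubleshooting steps across these videos",
        "Flag any instances where safety protocols are skipped",
    ],
    worked=["pattern identification", "safety checks"],
    broke=["extreme angles", "very poor lighting"],
    constraints=["720p resolution", "adequate lighting"],
)
print(findings.to_brief())
```

The structure matters more than the code: every sprint should leave behind the prompts, the wins, the failures, and the constraints, so the next team does not have to rediscover them.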

Stop reading about AI capabilities. Go test them.

Open aistudio.google.com.

Option 1 - Validate a work idea (2 hours):

Pick something you're planning to build or currently building. Check the AI Studio gallery: Is there a capability that would change your approach?

Click "Build" → Browse the templates → Pick one relevant to your use case → Upload real examples → Test (with data you are permitted to use!) → Document what works and what doesn't

That's your brief. You just validated (or invalidated) an assumption before committing resources.

Option 2 - Build something personal (Saturday afternoon):

Build a tool you'd actually use. Pick a problem you want to solve. Something small, something fun, something you're curious about.

The capabilities you discover building for yourself reveal enterprise applications you'd never find in requirements docs. That's how Aura happened - exploring voice and multilingual led to ideas about accessibility, onboarding, training content.

Make this a monthly habit:

First Tuesday of every month: Spend two hours in AI Studio checking "What's new." Test one capability that didn't exist last quarter. Ask: Does this change what we're planning to build?
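To get those sessions into calendars, you can compute the dates up front. This is a small sketch using only Python's standard library. The `first_tuesday` helper is hypothetical, and the year is just an example.

```python
import calendar
from datetime import date


def first_tuesday(year: int, month: int) -> date:
    """Return the date of the first Tuesday in the given month."""
    first_weekday = date(year, month, 1).weekday()  # Monday is 0, Tuesday is 1
    offset = (calendar.TUESDAY - first_weekday) % 7  # days from the 1st to Tuesday
    return date(year, month, 1 + offset)


# Example: list every discovery session for one year.
for month in range(1, 13):
    print(first_tuesday(2025, month).isoformat())
```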

AI evolves faster than roadmaps. Make AI Studio your scanning mechanism - not just a prototyping tool, but your early warning system for capabilities that obsolete current plans.

The Real Shift

So, where does this leave us? Moving from annual AI planning based on January's capabilities to continuous discovery of what's newly possible.

From "we'll build what we planned" to "we validated a better approach in AI Studio before we started".

Go to aistudio.google.com. Pick something from the gallery. Build for two hours. See what you discover.

If you build something interesting or find a capability that changes your product thinking, I'd love to hear about it. Reply to this email or find me on LinkedIn.

Need help turning AI discoveries into organisational delivery? That's what I do with Atomic Theory Consulting - strategy, governance, and change management that moves from prototype to production. Get in touch.

See you next week!

Faisal

P.S. Know someone else who’d benefit from this? Share this issue with them.

The Atomic Builder is written by Faisal Shariff and powered by Atomic Theory Consulting Ltd — helping organisations put AI transformation into practice.